What Are Autonomous Systems?

Definition

An autonomous system is one that can achieve a given set of goals in a changing environment—gathering information about the environment and working for an extended period of time without human control or intervention. Driverless cars and autonomous mobile robots (AMRs) used in warehouses are two common examples.

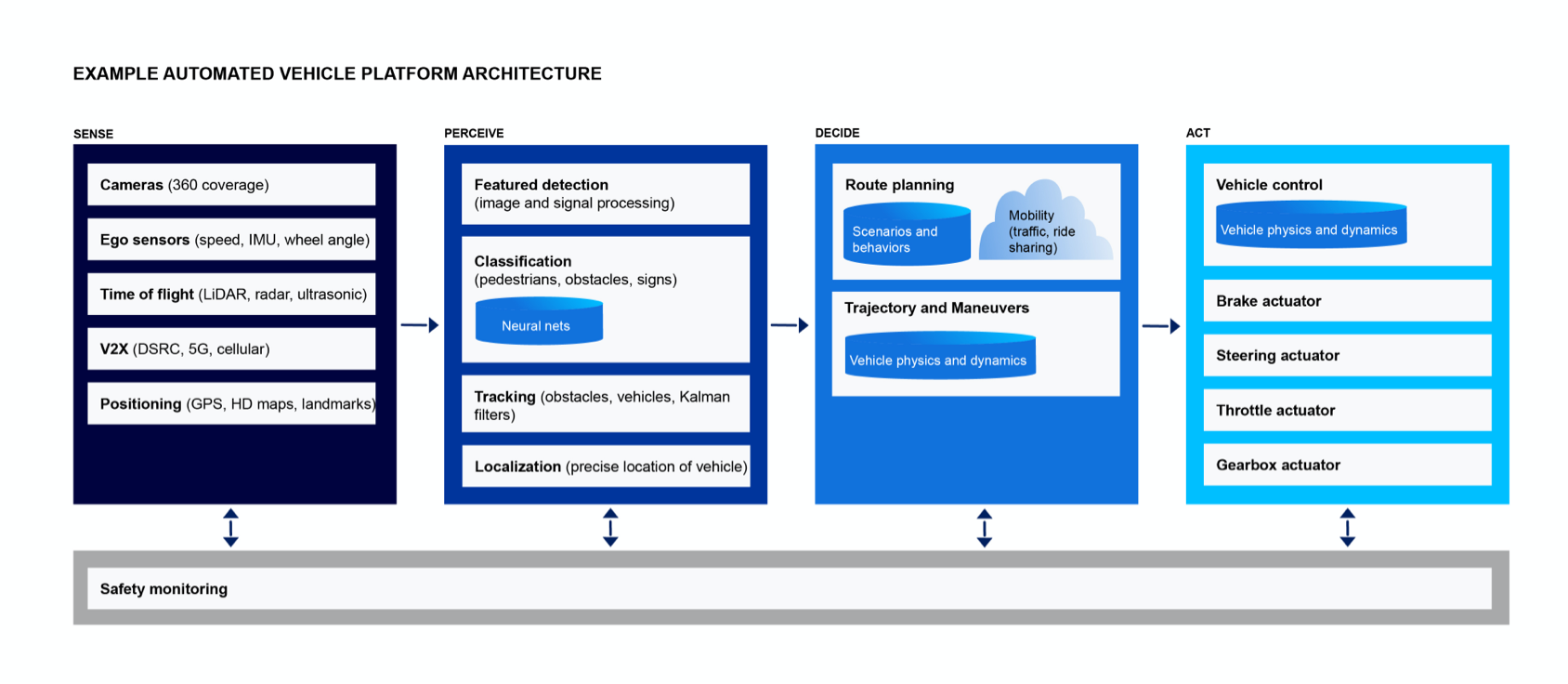

Autonomy requires that the system be able to do the following:

- Sense the environment and keep track of the system’s current state and location.

- Perceive and understand disparate data sources.

- Determine what action to take next and make a plan.

- Act only when it is safe to do so, avoiding situations that pose a risk to human safety, property or the autonomous system itself.

An autonomous system can sense, perceive, plan and act without intervention.

Autonomous vs. Semi-Autonomous Systems

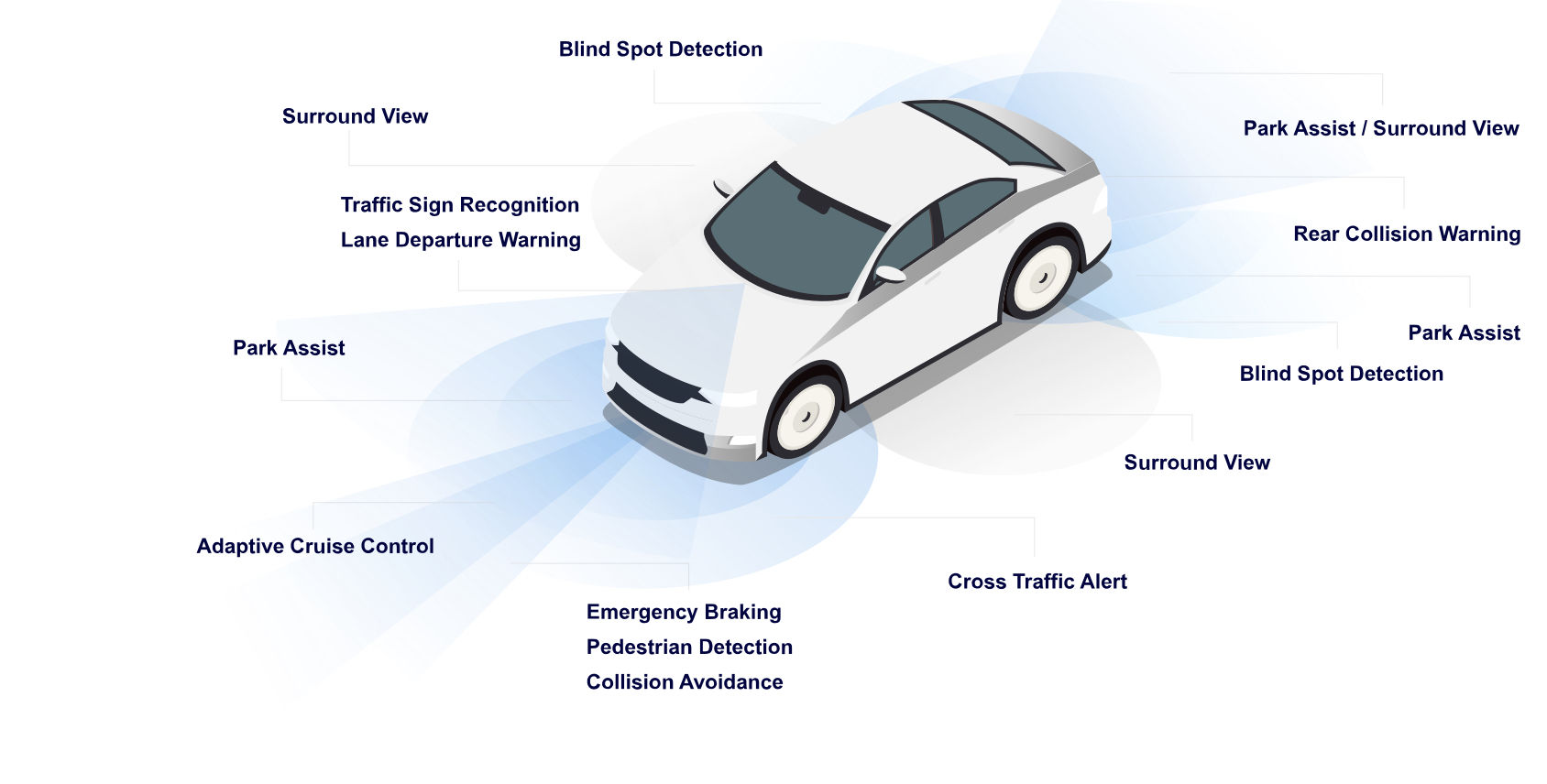

The vast majority of systems currently described as autonomous are semi-autonomous rather than fully autonomous. Cars with driver support systems such as lane-keep assist and advanced braking systems are semi-autonomous, as are robotic surgery systems, robot vacuums and most unmanned aerial vehicles (UAVs, or drones). Most fully autonomous systems, such as driverless cars, remain too costly, data-intensive, power-hungry or unsafe for widespread public use.

Significant progress is being made. In Gartner's Connected Vehicle and Smart Mobility Hype Cycle, electric vehicles, autonomous vehicle perception systems and connected car platforms have passed the "peak of inflated expectations," moved through the "trough of disillusionment" and reached the "slope of enlightenment."

Examples of Autonomous Systems

Video of the first communication in Canada between an autonomous vehicle and live city infrastructure on a public road.

Autonomous Vehicles

Both passenger and commercial vehicles include autonomous capabilities, which exist on a continuum, as identified by the six levels of driving automation defined by SAE J3016:

- SAE levels 0–2 provide driver support, ranging from warnings and momentary assistance to collision avoidance features, such as autonomous steering, braking and acceleration support.

- SAE levels 3–5 provide automated driving features that range from those suitable only for limited conditions or applications, such as a traffic jam or local taxi service, to those that enable a driverless vehicle to take passengers or cargo anywhere and in all conditions.

Many passenger vehicles in widespread use meet SAE J3016 Level 0, 1 or 2.
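The split between driver support (Levels 0-2) and automated driving (Levels 3-5) can be encoded as a small lookup. The Python sketch below is illustrative only: the enum member and helper names are our own shorthand rather than identifiers defined by SAE J3016, and the helpers summarize the human's role at a coarse level (at Level 3 the human must take over when the feature requests it; at Levels 4 and 5 no takeover is required, and only Level 5 is designed to operate in all conditions).

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six SAE J3016 levels of driving automation (names are shorthand)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def is_driver_support(level: SAELevel) -> bool:
    """Levels 0-2: the human is driving and must supervise at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_must_be_ready_to_take_over(level: SAELevel) -> bool:
    """Levels 0-3 rely on a human; Level 3 only when the feature requests it."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


def works_in_all_conditions(level: SAELevel) -> bool:
    """Only Level 5 is designed to drive anywhere and in all conditions."""
    return level == SAELevel.FULL_AUTOMATION
```

For example, `is_driver_support(SAELevel(2))` is `True`, while a Level 4 robotaxi needs no human takeover but is still limited to particular conditions.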

Autonomous vehicles could account for up to 15 percent of global light vehicle sales by 2030.

Source: McKinsey, "Automotive revolution: perspective towards 2030," January 2016

As for autonomous commercial vehicles, far fewer trucks than cars are built each year, but autonomous trucks will radically change cargo transport and the use of public highways. One autonomous cargo transport technique is truck platooning, in which a human driver in a lead tractor-trailer truck heads a convoy of autonomous tractor-trailer trucks, enabling a single driver to move much more cargo. Automated driver support systems allow each autonomous truck to follow the truck in front of it at a set distance. The human driver can drop off follower trucks and pick up new ones at predetermined locations along a highway.
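Following at a set distance is essentially a feedback-control problem. The sketch below assumes a simple proportional-derivative (PD) gap controller on a straight road, with a leader driving at constant speed; the gains, acceleration limits and function names are illustrative assumptions, not parameters of any production platooning system.

```python
def follower_accel(gap_m: float, target_gap_m: float, lead_speed: float,
                   own_speed: float, kp: float = 0.5, kd: float = 1.0,
                   max_accel: float = 2.0, max_brake: float = -6.0) -> float:
    """PD gap keeping: close the distance error and match the leader's speed."""
    # Too far back, or a faster leader -> speed up; otherwise slow down.
    accel = kp * (gap_m - target_gap_m) + kd * (lead_speed - own_speed)
    # Clamp to what the truck's powertrain and brakes can deliver (assumed limits).
    return max(max_brake, min(max_accel, accel))


def simulate_follower(target_gap_m: float = 15.0, lead_speed: float = 25.0,
                      gap_m: float = 40.0, own_speed: float = 20.0,
                      dt: float = 0.1, steps: int = 600) -> tuple[float, float]:
    """Leader cruises at constant speed; return the follower's final (gap, speed)."""
    for _ in range(steps):
        accel = follower_accel(gap_m, target_gap_m, lead_speed, own_speed)
        own_speed += accel * dt
        gap_m += (lead_speed - own_speed) * dt  # gap grows when the leader is faster
    return gap_m, own_speed
```

With the defaults, the follower starts 40 m back at 20 m/s and settles at the 15 m target gap while matching the leader's 25 m/s. Many real systems use a speed-dependent spacing policy instead of a fixed distance; replacing `target_gap_m` with a time gap multiplied by `own_speed` would be one such variation.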

Autonomous vehicles also include rail vehicles. Autonomous freight trains will move cargo with a human acting only as an observer.

Autonomous Robots

Otto the autonomous snowplow is an autonomous robot developed at L-SPARK, Canada's largest SaaS accelerator, with advice and software from BlackBerry QNX.

In agriculture, the idea of fully autonomous tractors has engaged generations of farmers, but today's autonomous tractors still require a supervising operator. Other autonomous systems used on farms include automatic milking machines and strawberry-picking robots.

In the medical field, robots assist surgeons in performing high-precision procedures, such as coronary artery bypass and cancer removal.

High-mobility autonomous systems, such as four-legged walking robots, can navigate obstacles, access difficult-to-reach locations and perform tasks hazardous to humans. Examples include industrial inspection robots and rescue mission robots.

Autonomous Warehouse and Factory Systems

Autonomous Drones

Unmanned aerial vehicles, known as UAVs or drones, are aircraft that fly without a pilot on board and can pilot themselves to varying degrees. Drones have long been used for reconnaissance, surveying, asset inspection and environmental studies. Two common uses for drones are agriculture and oil well inspection.

Autonomous UAV technology builds on the autopilot technology used in commercial passenger aircraft. Fully autonomous (pilotless) passenger aviation remains a distant goal. Similarly, uncrewed UAVs are usually piloted remotely rather than directing their own flight paths. Two UAV tasks that are commonly performed fully autonomously are airborne refueling and ground-based battery swapping.

Sensors and Sensor Fusion

Sensors Used in Autonomous Systems

Sensors help an autonomous system determine its location, identify informational signs, and avoid obstacles and other hazards. The following list describes common types of sensors and their purposes. Because each type has its own strengths and weaknesses, systems often pair sensors so that one type compensates for the weakness of another.

- Camera sensors: Video and still-image cameras are common types of optical sensors. Multiple cameras can provide a 360° view of the environment, which is vital for identifying cars, pedestrians, traffic signs and signals, guardrails and road markings in a traffic setting. Camera sensors are equally valuable for autonomy in other environments, such as warehouses and stores.

- Ultrasonic sensors: Ultrasonic sensors use high-frequency sound waves to estimate distances between the sensor and an object. They are typically used in short-distance applications, such as a parking assistance system in a car.

- Infrared sensors: Infrared sensors provide images in low-light conditions, such as those encountered by night-vision systems.

- LiDAR sensors: LiDAR sensors use lasers to estimate distance and create high-resolution 3D images of the environment. Rotating LiDAR systems that offer a full 360° view around an autonomous device are cost-prohibitive for mass-market use. However, cheaper solid-state LiDAR systems, which offer a narrower field of view, are feasible for mass deployment.

- Radar sensors: Radar sensors use radio waves, typically in the 24 GHz band for short-range sensing and the 77 GHz band for long-range sensing. In autonomous vehicles, they monitor blind spots and support safer distance control and braking assistance.

Unlike image sensors and LiDAR, radar sensors are largely unaffected by weather conditions such as fog, rain and snow.

Radar sensors are often paired with cameras to achieve image quality that is closer to the high-resolution images provided by LiDAR.
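Ultrasonic sensors and pulsed LiDAR both estimate distance from time of flight: the sensor emits a pulse, times the echo, and halves the round trip. The Python sketch below assumes the echo comes straight back from a single reflector and uses a common linear approximation for the speed of sound in dry air; the function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def speed_of_sound_m_s(air_temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air (about 343 m/s at 20 °C)."""
    return 331.3 + 0.606 * air_temp_c


def tof_distance_m(round_trip_s: float, wave_speed_m_s: float) -> float:
    """Distance to a reflector from the echo's round-trip time.

    The pulse travels out and back, so the one-way distance is half
    of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_s * round_trip_s / 2.0
```

A 10 ms ultrasonic echo corresponds to roughly 1.7 m, while a LiDAR echo arriving after only 1 µs corresponds to about 150 m, which is why LiDAR needs nanosecond-scale timing.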

How Radar and LiDAR Compare:

| Attribute | Radar | Rotating LiDAR |
|---|---|---|
| Source of name | Radio detection and ranging | Light detection and ranging |
| Types of waves | Radio waves | Light waves from laser beams |
| When invented | 1930s, to detect hostile aircraft | 1960s; used in the Apollo moon missions |
| Image quality | May lack the detail needed to identify objects | 3D monochromatic image |
| Spatial resolution | Much lower than LiDAR, even for high-resolution radar | Good at detecting small objects, such as the hand signals of a bicyclist |
| Distance | Longer, allowing more time for reaction | Shorter, 30 to 200 meters |
| Reliability | More reliable due to solid-state components | Less reliable due to more moving parts |
| Velocity | Accurately determines relative traffic speed | Poor detection of the speed of other vehicles |
| Field of view | Loses sight around curves | 360° view and can track direction of movement |
| Object identification | Imprecise; may mistake multiple smaller objects for one larger object | Precise; can detect which direction a pedestrian is facing |
| Effect of weather | Largely unaffected by snow, fog, rain and dusty wind | Impaired by snow, fog, rain and dusty wind |
| Power requirements | Less power | More power |
| Price | $50 to $200 | $7,500 |
| Size | Small | Large |
| Best uses | Low-speed vehicles, such as an autonomous plow, and car parking assistance systems | Vehicles that travel at higher speeds |

Table 1: Advantages and disadvantages of radar and LiDAR.
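The velocity row of Table 1 reflects the Doppler effect: a moving target shifts the frequency of the reflected radio wave in proportion to its relative speed. The sketch below applies the standard two-way Doppler relation for a radar whose transmitter and receiver are co-located, valid for speeds far below the speed of light. The 77 GHz default carrier comes from the radar description above; the function names are illustrative.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def doppler_shift_hz(relative_speed_m_s: float, carrier_hz: float = 77e9) -> float:
    """Two-way Doppler shift of a radar echo (positive when the target approaches).

    Uses f_d = 2 * v * f_c / c, valid for speeds far below the speed of light.
    """
    return 2.0 * relative_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def relative_speed_m_s(doppler_hz: float, carrier_hz: float = 77e9) -> float:
    """Invert the Doppler relation to recover the relative (radial) speed."""
    return doppler_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)
```

At 77 GHz, a closing speed of 10 m/s (36 km/h) shifts the echo by roughly 5.1 kHz, a frequency offset the radar can measure directly in each measurement cycle.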

- Accelerometer sensors: Accelerometers measure acceleration, the rate at which velocity changes. They are used for orientation, slope and collision detection.

- Gyro sensors: Gyro sensors measure angular velocity, from which changes in orientation can be computed. Traditional mechanical gyroscopes use a spinning rotor whose angular momentum resists changes in orientation; most gyro sensors in today's vehicles and robots are compact MEMS devices that sense rotation through the Coriolis effect on a vibrating structure. Gyro sensors help maintain direction in applications such as tunnel mining and aerospace.

- IMU sensor: An inertial measurement unit (IMU) combines gyro sensors and accelerometers to measure angular velocity and acceleration, from which orientation and changes in position can be computed.

- Inertial navigation system: An inertial navigation system (INS) includes an IMU and estimates vehicle position, orientation and speed by integrating the IMU's measurements from a known starting point.

- Global positioning system (GPS) receiver: A GPS receiver is a type of sensor that determines the system's position on Earth by trilateration, using its measured distances to several satellites. GPS receivers are used with maps to estimate position, direction and speed.
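An IMU's gyro and accelerometer fail in complementary ways: integrating the gyro's angular rate gives a smooth angle that slowly drifts, while the tilt implied by gravity on the accelerometer does not drift but is noisy and corrupted by vehicle acceleration. A complementary filter is one simple way to blend them. The sketch below estimates a single tilt angle; the blend factor `alpha`, the axis convention and the function names are assumptions for illustration.

```python
import math


def accel_tilt_rad(ay: float, az: float) -> float:
    """Tilt implied by gravity on the y and z accelerometer axes.

    Only valid when the sensor is not otherwise accelerating; the axis
    convention here is an assumption and varies between devices.
    """
    return math.atan2(ay, az)


def complementary_filter(samples, dt: float, alpha: float = 0.98,
                         initial_angle_rad: float = 0.0) -> list[float]:
    """Fuse gyro rate and accelerometer tilt into one angle estimate per sample.

    samples: iterable of (gyro_rate_rad_s, ay, az) tuples.
    alpha: weight on the integrated gyro angle (short-term accuracy);
           1 - alpha weights the accelerometer angle (long-term accuracy).
    """
    angle = initial_angle_rad
    estimates = []
    for gyro_rate, ay, az in samples:
        predicted = angle + gyro_rate * dt   # integrate the gyro: smooth but drifts
        measured = accel_tilt_rad(ay, az)    # gravity reference: noisy but drift-free
        angle = alpha * predicted + (1.0 - alpha) * measured
        estimates.append(angle)
    return estimates
```

Held still at a 0.3 rad tilt for 10 s with a gyro bias of 0.01 rad/s, the estimate settles within about 0.005 rad of the true angle, whereas integrating the gyro alone would drift by 0.1 rad over the same period.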

Wireless Vehicle Communications

In addition to sensors on an autonomous system, machines in the environment can also provide information to the system. Autonomous vehicles will pass information to nearby systems and vice versa. Vehicle-to-everything (V2X or V2E) communication between a vehicle and its environment improves safety by enabling communication with the following types of systems:

- Vehicle-to-device (V2D): onboard devices within the vehicle

- Vehicle-to-grid (V2G): the electric power grid, for plug-in electric vehicles

- Vehicle-to-infrastructure (V2I): smart traffic signals and connected parking spaces

- Vehicle-to-network (V2N): services ranging from cooperative intelligent transport systems (C-ITS) to advanced driver assistance systems (ADAS) to OEM telematics and aftermarket services

- Vehicle-to-pedestrian (V2P): roadway signaling and alerts to pedestrian smartphones

- Vehicle-to-vehicle (V2V): speed and position of other vehicles for collision detection and avoidance

Such communication uses one of two technologies: cellular vehicle-to-everything (C-V2X), which builds on LTE and 5G, or dedicated short-range communication (DSRC) based on IEEE 802.11p, the Wireless Access in Vehicular Environments (WAVE) amendment to IEEE 802.11. Standard LTE cellular connections route traffic through the network and do not provide the direct peer-to-peer communication needed for V2V and V2P; C-V2X adds a direct communication mode for this purpose. In the future, 5G-based C-V2X is expected to make cars smarter and driving safer.
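As an example of how V2V speed and position data can support collision detection, the sketch below computes time to collision (TTC) between a vehicle and the one ahead of it in the same lane. The `V2VMessage` type is a hypothetical, heavily simplified stand-in (standardized messages, such as the SAE J2735 Basic Safety Message, carry far more information), and the 3-second warning threshold is an illustrative assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class V2VMessage:
    """Hypothetical, simplified V2V broadcast for vehicles in the same lane."""
    vehicle_id: str
    position_m: float   # position along the lane; vehicle lengths ignored
    speed_m_s: float


def time_to_collision_s(own: V2VMessage, lead: V2VMessage) -> float:
    """Seconds until `own` reaches `lead` if both keep their current speeds."""
    gap = lead.position_m - own.position_m
    if gap <= 0:
        raise ValueError("lead vehicle must be ahead of own vehicle")
    closing_speed = own.speed_m_s - lead.speed_m_s
    if closing_speed <= 0:
        return math.inf  # not closing in, so no collision on the current course
    return gap / closing_speed


def should_warn(own: V2VMessage, lead: V2VMessage, threshold_s: float = 3.0) -> bool:
    """Raise a forward-collision warning when TTC drops below the threshold."""
    return time_to_collision_s(own, lead) < threshold_s
```

A vehicle at 30 m/s that is 40 m behind another vehicle traveling at 20 m/s has a TTC of 4 s; if the gap shrinks to 20 m, the TTC falls to 2 s and a warning is raised.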

Sensor Fusion

Sensor fusion combines data from multiple types of sensors to produce a more accurate picture of the environment.

To perceive and understand the sensor data, the system must filter disparate data sources, localize data assets, interpret those assets and classify the data. Sensor fusion algorithms, such as the Kalman filter, Bayesian networks and convolutional neural networks, help the autonomous system extract as much useful information as possible from the sensor data.

- The Kalman filter is used for navigation and tracking. It repeatedly predicts the state of a moving object, such as its location and velocity, and then corrects that prediction with each new measurement, weighting the prediction and the measurement by their uncertainties.

- Bayesian networks provide a probabilistic computational model for decision-making in the presence of uncertainty.

- Convolutional neural networks are used in machine learning for pattern recognition.
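The Kalman filter's predict-then-update cycle can be shown with a single axis of motion. The sketch below tracks position and velocity under a constant-velocity model, using noisy position measurements only. The noise variances and function name are illustrative assumptions, and the 2x2 matrix algebra is written out by hand to keep the example dependency-free.

```python
def kalman_cv_1d(measurements, dt: float, meas_var: float, accel_var: float,
                 x0: float = 0.0, v0: float = 0.0, p0: float = 100.0):
    """Constant-velocity Kalman filter on one axis.

    State: position x and velocity v; only position is measured.
    Returns a list of (x, v) estimates, one after each measurement update.
    """
    x, v = x0, v0
    # State covariance [[p00, p01], [p10, p11]], starting very uncertain.
    p00, p01, p10, p11 = p0, 0.0, 0.0, p0
    # Process noise for a random (white-noise) acceleration.
    q00, q01, q11 = accel_var * dt**4 / 4, accel_var * dt**3 / 2, accel_var * dt**2
    estimates = []
    for z in measurements:
        # Predict: x' = F x with F = [[1, dt], [0, 1]]; P' = F P F^T + Q.
        x = x + v * dt
        p00, p01, p10, p11 = (p00 + dt * (p01 + p10) + dt * dt * p11 + q00,
                              p01 + dt * p11 + q01,
                              p10 + dt * p11 + q01,
                              p11 + q11)
        # Update with a position measurement (H = [1, 0]).
        innovation = z - x
        s = p00 + meas_var                 # innovation variance
        k0, k1 = p00 / s, p10 / s          # Kalman gain
        x += k0 * innovation
        v += k1 * innovation
        # P = (I - K H) P, using the predicted covariance on the right-hand side.
        p00, p01, p10, p11 = ((1 - k0) * p00, (1 - k0) * p01,
                              p10 - k1 * p00, p11 - k1 * p01)
        estimates.append((x, v))
    return estimates
```

Each update weights the new measurement by the gain, which is large when the prediction is uncertain relative to the sensor noise (`meas_var`) and shrinks as the filter gains confidence. Fed exact positions of an object moving at 2 m/s, the velocity estimate converges to 2 m/s even though velocity is never measured directly.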

Software for Autonomous Systems

Building safe, secure and reliable autonomous systems begins with the architecture, then the design, and then the software foundation. In this section, you’ll learn about autonomous systems architecture, functional safety systems, the future of autonomous system software, a safe software foundation and the many security considerations for autonomous systems.

The growth of autonomous systems will involve a transition from hardware-driven equipment to software-driven electronics, regardless of whether the autonomous system is a snowplow or a surgical system. The software requirements for real-time control, safety, security and reliability are similar across industries. At BlackBerry QNX, we provide the operating system or software foundation for developers facing the myriad challenges of building safe, secure and reliable software for use in a wide variety of autonomous systems.

Autonomous System Software Architecture

| Sense | Perceive and Understand | Decide | Act |
|---|---|---|---|
| Gather information from sources at hand: | Data Organization: | Decide next actions based on classified data and plan: | When safe, perform action(s) |
| • Sensors | • Filter disparate data sources | • Route planning | |
| • Maps | • Localize data assets | • Trajectory planning | |
| • Cameras | • Interpret assets | • System health monitoring | |
| • External communications | • Classify data | • Motion planning | |
| • GPS | | • Determine physical dynamics of planned actions | |
| • External data sources (weather, terrain conditions) | | | |
| • Acoustics | | | |


Table 2: High-Level Considerations for Autonomous Software System Design
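The four columns of Table 2 map naturally onto a control loop. The Python sketch below runs one pass of sense, perceive, decide and act for a vehicle that should stop when an obstacle is too close. The sensor callables, the stop-distance rule and the health check are hypothetical simplifications chosen to illustrate the structure, not a recommended design.

```python
from typing import Callable, Optional

# A reading is a distance in metres to the nearest object, or None if the source failed.
Reading = Optional[float]


def sense(sources: dict[str, Callable[[], Reading]]) -> dict[str, Reading]:
    """Sense: poll every available source (here, hypothetical range sensors)."""
    return {name: read() for name, read in sources.items()}


def perceive(readings: dict[str, Reading]) -> list[float]:
    """Perceive and understand: drop failed or invalid readings, sort the rest."""
    return sorted(r for r in readings.values() if r is not None and r >= 0.0)


def decide(obstacles_m: list[float], cruise_speed: float, stop_distance_m: float) -> float:
    """Decide: pick a target speed from the interpreted data."""
    if obstacles_m and obstacles_m[0] < stop_distance_m:
        return 0.0
    return cruise_speed


def act(target_speed: float, healthy: bool, actuate: Callable[[float], None]) -> None:
    """Act: execute the plan only when it is safe; otherwise command a stop."""
    actuate(target_speed if healthy else 0.0)


def control_step(sources: dict[str, Callable[[], Reading]], cruise_speed: float,
                 stop_distance_m: float, actuate: Callable[[float], None]) -> None:
    readings = sense(sources)
    obstacles = perceive(readings)
    # System health monitoring: if every source failed, nothing can be trusted.
    healthy = any(r is not None for r in readings.values())
    act(decide(obstacles, cruise_speed, stop_distance_m), healthy, actuate)
```

In a real system each stage would run on its own schedule with far richer data, but the shape stays the same: the act stage executes the plan only when the health check passes, in line with the table's "When safe, perform action(s)" column.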

Functional Safety Systems

Unsafe autonomous systems are a grave concern of consumers, governments and industry watchers. It may not be fair, but autonomous systems are held to a higher standard of safe behavior than humans. That is, they must prove a better safety record than humans or they will not be accepted by the public.

In passenger vehicles, safety features such as lane-departure warnings and collision detection, and active-control features such as automatic braking and lane-keep assist, must always work. In comparison, the public is willing to accept mistakes from human drivers.


Abstraction

Autonomous systems today are proprietary networks of systems from multiple suppliers, integrated by a system developer. This makes it difficult for developers to scale software and limits the availability of third-party aftermarket applications.

In the future, developers will interact with autonomous systems via a software platform that abstracts the hardware, abstracts the sensors and pushes the interface to a higher-level set of software services via an application programming interface (API). This will free developers from having to interact with a specific type of LiDAR, radar or camera in use—and enable them to simply request information via high-level services provisioned with APIs. Connectivity will be similarly abstracted, so the communications within the car, with other vehicles and with the cloud will be simpler for a developer to implement without requiring a deep understanding of a specific communication technology.
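A minimal sketch of this kind of abstraction, assuming a Python `Protocol` as the interface: applications call a high-level `ObstacleService` and never see which sensor, or which vendor's units, sit underneath. Both adapter classes and the service are hypothetical and do not represent any QNX API; the "trust the closest report" rule is likewise an assumption.

```python
from typing import Protocol


class RangeSensor(Protocol):
    """Hardware-independent interface that every sensor adapter satisfies."""

    def nearest_obstacle_m(self) -> float: ...


class HypotheticalRadarAdapter:
    """Wraps an imaginary radar that reports range in centimetres."""

    def __init__(self, raw_range_cm: int) -> None:
        self._raw_range_cm = raw_range_cm

    def nearest_obstacle_m(self) -> float:
        return self._raw_range_cm / 100.0  # normalize vendor units to metres


class HypotheticalLidarAdapter:
    """Wraps an imaginary LiDAR that reports a list of point ranges in metres."""

    def __init__(self, point_ranges_m: list[float]) -> None:
        self._point_ranges_m = point_ranges_m

    def nearest_obstacle_m(self) -> float:
        return min(self._point_ranges_m)


class ObstacleService:
    """High-level service: callers get an answer without knowing the hardware."""

    def __init__(self, sensors: list[RangeSensor]) -> None:
        self._sensors = sensors

    def nearest_obstacle_m(self) -> float:
        # Conservative rule (an assumption): trust the closest report.
        return min(s.nearest_obstacle_m() for s in self._sensors)
```

Supporting a camera-based range estimator would only require another class with a `nearest_obstacle_m()` method; application code that calls the service would not change.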


Software Foundation for Safe Systems

At the core of any safety-critical autonomous system is a safe, reliable and secure software foundation or operating system. The following components provide the building blocks for safe autonomous systems.

- RTOS: A deterministic real-time operating system ensures that higher-priority tasks get the time they need, when they need it, by pre-empting lower-priority tasks.

- Hypervisor: A hypervisor provides safety and security through secure virtualized isolation—and enables secure and reliable consolidation of multiple subsystems (virtual domains) on a single system on a chip (SoC).

- Secure data communications and over-the-air updates (OTA): Autonomous systems depend on reliable data communications at every level—on the system, among systems and with the environment. Sensor data and authenticated OTA updates must arrive without interference.

- Advanced driver assistance systems (ADAS): Pre-configured frameworks for ADAS provide a sensor framework and middleware to ease the burden of sensor fusion for developers.
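The RTOS behavior described above, in which a higher-priority task pre-empts a lower-priority one, can be illustrated with a tick-by-tick simulation of fixed-priority preemptive scheduling. This is a teaching sketch, not how QNX or any other RTOS is implemented; the `Task` fields and the convention that a larger number means a higher priority are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str       # must be unique within a schedule
    priority: int   # larger number = higher priority (assumption for this sketch)
    release: int    # tick at which the task becomes ready to run
    duration: int   # CPU ticks the task needs to finish


def schedule(tasks: list[Task]) -> list[str]:
    """Simulate fixed-priority preemptive scheduling, one tick at a time.

    At every tick the highest-priority ready task runs, so a newly released
    higher-priority task immediately pre-empts a lower-priority one.
    Returns the name of the task that ran at each tick, or "idle".
    """
    remaining = {t.name: t.duration for t in tasks}
    timeline: list[str] = []
    tick = 0
    while any(left > 0 for left in remaining.values()):
        ready = [t for t in tasks if t.release <= tick and remaining[t.name] > 0]
        if ready:
            running = max(ready, key=lambda t: t.priority)
            remaining[running.name] -= 1
            timeline.append(running.name)
        else:
            timeline.append("idle")
        tick += 1
    return timeline
```

A low-priority task `L` released at tick 0 runs once, is pre-empted at tick 1 when the high-priority task `H` becomes ready, and resumes only after `H` finishes, giving the timeline `L H H L L L`.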

How BlackBerry QNX Can Help You

BlackBerry QNX Software

From surgical robots and cars to trains and traffic lights, BlackBerry® QNX® software provides the foundation for safe, reliable and secure systems:

- QNX® OS for Safety is designed specifically for mission- and safety-critical software for autonomous systems. This pre-certified RTOS reduces development time, risk and cost for autonomous system developers.

- QNX Hypervisor enables developers to partition and isolate safety-critical systems from non-safety-critical systems or run multiple OS versions on one SoC, helping to ensure that critical systems will continue to run safely.

- QNX® Black Channel Communications Technology helps to ensure the safety and integrity of data during communication by encapsulating data in transit in a safety header and performing safety checks to validate it at both ends.

- ADAS and automated driving systems are a highly collaborative effort, requiring IP from an array of technology partners. The QNX platform for ADAS minimizes integration effort and speeds development.
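The idea of wrapping data in a safety header and validating it at both ends, independent of the transport underneath, can be sketched as follows. The frame layout (a sequence number and a timestamp, followed by the payload and a CRC-32 over both), the class and function names, and the assumption that sender and receiver clocks are synchronized are all illustrative; this is not the QNX Black Channel Communications Technology API or wire format.

```python
import struct
import zlib

# Hypothetical safety header: 32-bit sequence number + 64-bit timestamp in ms (big-endian).
HEADER = struct.Struct(">IQ")
# CRC-32 trailer computed over header + payload.
TRAILER = struct.Struct(">I")


def encapsulate(payload: bytes, seq: int, timestamp_ms: int) -> bytes:
    """Sender side: prepend the safety header and append a CRC-32."""
    body = HEADER.pack(seq & 0xFFFFFFFF, timestamp_ms) + payload
    return body + TRAILER.pack(zlib.crc32(body))


class SafetyReceiver:
    """Receiver side: validate every frame before handing the payload on."""

    def __init__(self, max_age_ms: int) -> None:
        self.expected_seq = 0
        self.max_age_ms = max_age_ms

    def check(self, frame: bytes, now_ms: int) -> bytes:
        if len(frame) < HEADER.size + TRAILER.size:
            raise ValueError("frame too short")
        body, trailer = frame[:-TRAILER.size], frame[-TRAILER.size:]
        (crc,) = TRAILER.unpack(trailer)
        if zlib.crc32(body) != crc:
            raise ValueError("CRC mismatch: data corrupted in transit")
        seq, timestamp_ms = HEADER.unpack_from(body)
        if seq != self.expected_seq:
            raise ValueError("unexpected sequence number: frame lost, repeated or reordered")
        if now_ms - timestamp_ms > self.max_age_ms:
            raise ValueError("stale frame: older than the allowed age")
        # Advance only after every check has passed.
        self.expected_seq = (seq + 1) & 0xFFFFFFFF
        return body[HEADER.size:]
```

The receiver rejects corrupted frames (CRC mismatch), lost, repeated or reordered frames (unexpected sequence number) and stale frames (too old), which are typical failure modes a safety layer must detect regardless of the channel carrying the data.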

The QNX Neutrino RTOS is used in hundreds of millions of systems, including driverless vehicles and autonomous robots.

BlackBerry QNX Professional Services

Developing safe, reliable and secure software for mission-critical and safety-critical applications of all kinds is a core competency at BlackBerry QNX. We offer training, consulting, custom development, root cause analysis and troubleshooting, system-level optimization and onsite services for developers of a variety of embedded systems in automotive, medical, robotics, industrial automation, defense and aerospace, among other industries.


Industry Leadership

The BlackBerry QNX Autonomous Vehicle Innovation Centre (AVIC) helps developers of software for connected and self-driving vehicles through production-ready software developed independently and in collaboration with partners in the private and public sectors.

Additionally, as part of a program with L-SPARK, Canada’s largest software-as-a-service accelerator, BlackBerry QNX helps companies research and develop product prototypes in areas such as robotics, device security, sensor fusion (e.g. LiDAR, radar, cameras and GPS), functional safety, analytics, medical devices and autonomous vehicles.
